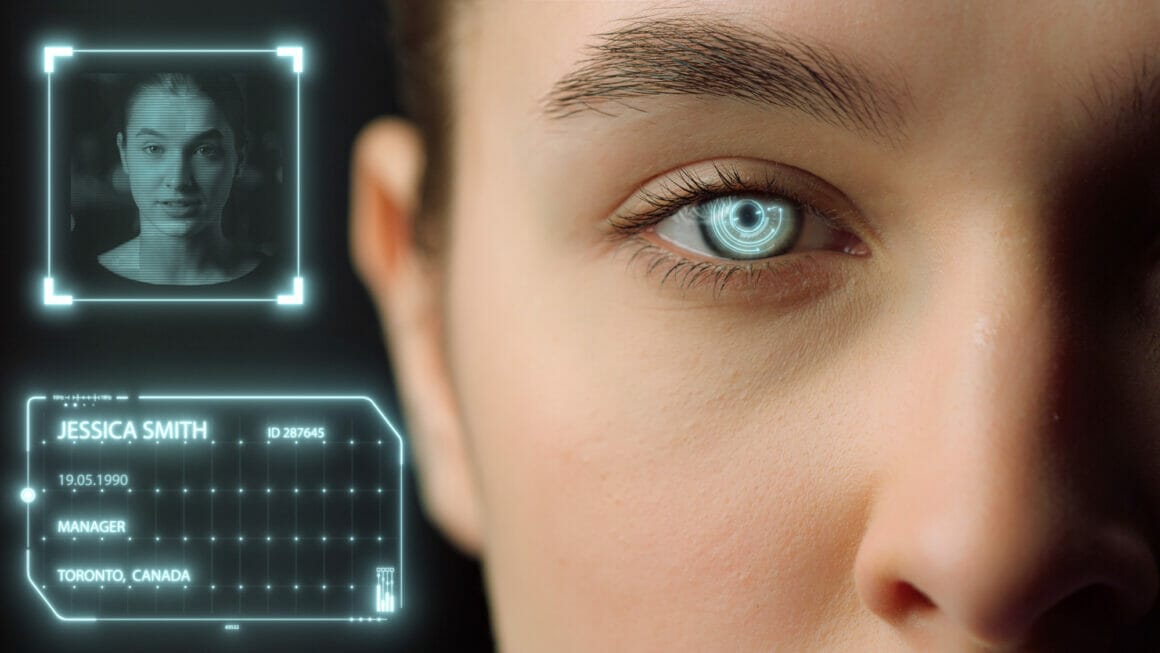

The FBI has warned companies that people are using deepfakes to apply for remote jobs. In an announcement posted yesterday to its Internet Crime Complaint Center (IC3), the agency said it has received complaints about applicants using stolen personal information, deepfaked video, and spoofed voices to apply for remote IT jobs.

A deepfake is a video or audio recording of a person in which their face, body, or voice has been digitally altered so that they appear to be someone else. Some deepfakes are realistic enough to be used maliciously: to spread fake news or, as in this case, to get jobs under a false identity.

FBI Warns Tech Companies

According to the FBI's announcement, companies have reported applicants using deepfaked videos, images, or recordings to apply for jobs. These applicants use other people's personally identifiable information, such as stolen identities, to apply for positions in the IT industry. Most of the positions involve programming, database, and software-related work.

The report noted that many of these positions had access to customer and employee data as well as financial and proprietary company data, suggesting that the fakers may be motivated by a desire to steal sensitive information.

It's still unknown how many of these attempts succeeded and how many were caught. According to the report, applicants used voice spoofing during online interviews, but their lip movements didn't match what was being said on the call. In some cases the fakes were even easier to spot: when the interviewee coughed or sneezed, the video spoofing software didn't reproduce it on screen.